Calculate entropy of a dataset in Python

Entropy is one of several measures that can be used to calculate information gain. A decision tree algorithm learns by building the tree from the dataset through optimization of a cost function.

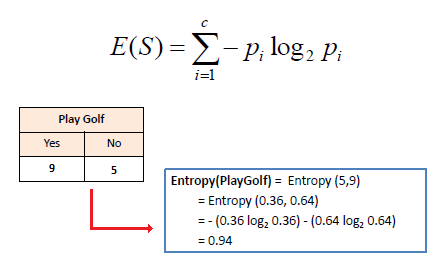

A decision tree algorithm tries a number of different ways to split the dataset into a series of decisions. It finds the relationship between the response variable and the predictors and expresses this relation in the form of a tree structure. The information gain of a split is the reduction from the parent node's entropy to the weighted average entropy of the child nodes, so the lower the entropy after a split, the better. Which decision tree does ID3 choose?

The term impure here means non-homogeneous: an impure node mixes examples of different classes. Uniformly distributed data has high entropy; for example, the byte values `s = range(0, 256)`, each occurring once, reach the maximum of 8 bits. Low entropy, by contrast, means the distribution varies (it has peaks and valleys).

If messages consisting of sequences of symbols from a set are to be encoded and transmitted over a noiseless channel, the Shannon entropy $H(p_k)$ gives a tight lower bound for the average number of units of information needed per symbol when the symbols occur with frequencies governed by the discrete distribution $p_k$ (Shannon, C.E. (1948), A Mathematical Theory of Communication). The self-information of an outcome quantifies how much information, or surprise, is associated with that one particular outcome.

The argument given to our function will be the series, list, or NumPy array for which we are trying to calculate the entropy. Display the top five rows of the dataset using the `head()` function. Suppose, for example, that we are calculating the entropy of a dataset with 20 examples, 13 for class 0 and 7 for class 1.
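
The definitions above can be checked with a few lines of standard-library Python. This is a minimal sketch, not the article's own code: `self_information` and `shannon_entropy` are our hypothetical helper names. Self-information of an outcome with probability $p$ is $-\log_2 p$ bits, and entropy is its expected value over the observed distribution.

```python
import math
from collections import Counter

def self_information(p: float) -> float:
    """Surprise, in bits, of an outcome that occurs with probability p."""
    return -math.log2(p)

def shannon_entropy(values) -> float:
    """Entropy in bits: the expected self-information over the observed values."""
    counts = Counter(values)
    n = sum(counts.values())
    return sum((c / n) * self_information(c / n) for c in counts.values())

# Uniformly distributed data: every byte value 0..255 appears once, giving 8 bits.
uniform = shannon_entropy(range(0, 256))

# Imbalanced dataset: 20 examples, 13 of class 0 and 7 of class 1.
imbalanced = shannon_entropy([0] * 13 + [1] * 7)

print(round(uniform, 3), round(imbalanced, 3))  # 8.0 0.934
```

The imbalanced dataset has less than 1 bit of entropy because one class dominates.
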
To compute the entropy of a specific cluster $i$, use:

$$H(i) = -\sum_{j \in K} p(i_j) \log_2 p(i_j)$$

where $p(i_j)$ is the probability of a point in cluster $i$ being classified as class $j$. For a binary target, the formula reduces to

$$H(X) = -\left(p_i \log_2 p_i + q_i \log_2 q_i\right)$$

where $p_i$ is the probability of $Y = 1$ (the probability of success of the event) and $q_i = 1 - p_i$ is the probability of $Y = 0$. The data is handled with pandas, a powerful, fast, flexible open-source library for data analysis. Our next task is to find which node will be next after the root; to calculate the entropy at a node, apply the same formula to the class proportions of the examples that reach that node.
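
Both formulas translate directly into Python. In this sketch `cluster_entropy` and `binary_entropy` are hypothetical names, and the cluster labels are made-up example data; the convention $0 \log_2 0 = 0$ is applied by skipping zero probabilities.

```python
import math
from collections import Counter

def cluster_entropy(labels) -> float:
    """H(i) = -sum_j p(i_j) * log2 p(i_j) over the classes j present in cluster i."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def binary_entropy(p: float) -> float:
    """H = -(p log2 p + q log2 q) with q = 1 - p; terms with probability 0 are skipped."""
    q = 1.0 - p
    return -sum(x * math.log2(x) for x in (p, q) if x > 0)

# Made-up cluster: 3 points of class "a" and 1 point of class "b".
print(round(cluster_entropy(["a", "a", "a", "b"]), 4))  # 0.8113
print(binary_entropy(0.5))  # 1.0
```
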

How does ID3 measure which attribute is most useful? Each attribute is evaluated by how well it alone classifies the training examples. Now, how does the decision tree algorithm use this measurement of impurity to build the tree? At a given node, the impurity is a measure of the mixture of different classes, in our case a mix of different car types in the Y variable.

The maximum possible entropy grows with the number of classes: with 4 classes the maximum entropy is 2 bits, with 8 classes it is 3 bits, and with 16 classes it is 4 bits.

One way to estimate entropy in data is to compress it and take the length of the result. To calculate it directly instead, a helper declared as `def eta(data, unit='natural'):` returns the entropy of `data`, with `unit` presumably selecting the logarithm base.
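
Only the signature of `eta` is given, so here is one possible body, sketched under an assumption: `unit` picks the logarithm base, with the names `'shannon'` (bits), `'natural'` (nats) and `'hartley'` (base 10) being our own choice, not confirmed by the source. The loop also checks the maximum-entropy values listed above, which equal $\log_2$ of the number of equally likely classes.

```python
import math
from collections import Counter

# Assumed mapping from unit name to logarithm base (hypothetical, for illustration).
BASES = {"shannon": 2.0, "natural": math.e, "hartley": 10.0}

def eta(data, unit: str = "natural") -> float:
    """Entropy of the empirical distribution of `data`, in the chosen unit."""
    if len(data) <= 1:
        return 0.0
    n = len(data)
    base = BASES[unit]
    return -sum((c / n) * math.log(c / n, base) for c in Counter(data).values())

# Balanced data with k classes reaches the maximum entropy of log2(k) bits.
for k in (4, 8, 16):
    print(k, round(eta(list(range(k)), unit="shannon"), 6))  # 4 2.0 / 8 3.0 / 16 4.0
```

With the default `'natural'` unit the result is in nats; dividing by $\ln 2$ converts it to bits.
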
Next, we will define our function with one parameter. Entropy, or information entropy, is the basic quantity of information theory and the expected value of the self-information. For any model distribution $q(x)$, the entropy of the data distribution $p(x)$ is bounded by $H(p) \le -\mathbb{E}_{x \sim p}[\log q(x)]$. The quantity on the right is what people sometimes call the negative log-likelihood of the data (drawn from $p(x)$) under the model $q(x)$. It's the loss function, indeed!

In `scipy.stats.entropy`, the `base` parameter is the logarithmic base to use; it defaults to $e$ (natural logarithm).

An entropy of 0 bits indicates a dataset containing one class; an entropy of 1 or more bits suggests maximum entropy for a balanced dataset (depending on the number of classes), with values in between indicating levels between these extremes. Decision trees classify instances by sorting them down the tree from the root node to some leaf node. Let's try calculating the entropy after splitting by all the values in "cap-shape".
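
A sketch of that split calculation follows. The rows are a tiny made-up sample in the spirit of the mushroom data (the `cap-shape` values and the `class` labels are hypothetical), and `entropy_after_split` is our own helper name: it groups rows by every value of the attribute and weights each group's entropy by the fraction of rows in it.

```python
import math
from collections import Counter, defaultdict

def entropy(labels) -> float:
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def entropy_after_split(rows, attribute, target="class") -> float:
    """Weighted average entropy of the subsets produced by every value of `attribute`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[attribute]].append(row[target])
    n = len(rows)
    return sum(len(g) / n * entropy(g) for g in groups.values())

# Hypothetical sample: "e" = edible, "p" = poisonous.
rows = [
    {"cap-shape": "convex", "class": "e"},
    {"cap-shape": "convex", "class": "p"},
    {"cap-shape": "flat", "class": "e"},
    {"cap-shape": "flat", "class": "e"},
    {"cap-shape": "bell", "class": "p"},
    {"cap-shape": "bell", "class": "p"},
]
before = entropy([r["class"] for r in rows])    # 3 "e" and 3 "p": 1 bit
after = entropy_after_split(rows, "cap-shape")  # only the mixed "convex" group adds entropy
print(before, round(after, 4), round(before - after, 4))  # 1.0 0.3333 0.6667
```

The last number is the information gain of splitting on `cap-shape` for this toy sample.
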
Note that entropy can be written as an expectation: $H(X) = -\mathbb{E}_{x \sim p}[\log p(x)]$. The negative log-likelihood bound obtained from maximum likelihood estimation (MLE) could then be tightened by considering a more expressive class of models. If you know the true entropy, you are saying that the data can be compressed this much and not a bit more.

Entropy is a way of measuring impurity or randomness in data points, so it can be used as a measure of the purity of a dataset, e.g., of how balanced its class distribution is. For a binary problem, an entropy of 1 means a completely impure subset and 0 a pure one. For a multiclass problem the same relationship holds, but the scale changes: the maximum entropy is $\log_2$ of the number of classes.

In the following, a small open dataset, the weather data, will be used to explain the computation of information entropy for a class distribution. As a simpler illustration, I have a box full of an equal number of coffee pouches of two flavors: Caramel Latte and the regular, Cappuccino.

Steps to calculate entropy for a split:
1. Calculate the entropy of the parent node.
2. Calculate the entropy of each child node of the split, then take the weighted average of the child entropies.

The relative entropy $D(p_k \| q_k)$ quantifies the increase in the average number of units of information needed per symbol if the encoding is optimized for the probability distribution $q_k$ instead of the true distribution $p_k$. A related quantity, the cross entropy $CE(p_k, q_k)$, satisfies the equation $CE = H + D$.
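
A small numeric check of these relationships, written with the standard library so it does not depend on a particular SciPy version. `pk` is the true distribution (an equal mix, like the coffee box) and `qk` a deliberately poor model; both are made-up values. The code confirms that cross entropy equals entropy plus relative entropy (the Kullback-Leibler divergence) and is never below the entropy.

```python
import math

def entropy(pk, base=2.0):
    return -sum(p * math.log(p, base) for p in pk if p > 0)

def relative_entropy(pk, qk, base=2.0):
    """D(pk || qk) = sum pk * log(pk / qk), the Kullback-Leibler divergence."""
    return sum(p * math.log(p / q, base) for p, q in zip(pk, qk) if p > 0)

def cross_entropy(pk, qk, base=2.0):
    """CE(pk, qk) = -sum pk * log(qk)."""
    return -sum(p * math.log(q, base) for p, q in zip(pk, qk) if p > 0)

pk = [0.5, 0.5]  # true distribution: an equal mix of two flavors
qk = [0.9, 0.1]  # a poor model of that distribution

H, D, CE = entropy(pk), relative_entropy(pk, qk), cross_entropy(pk, qk)
print(round(H, 4), round(D, 4), round(CE, 4))  # 1.0 0.737 1.737
```
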
Each possible outcome is referred to as an event of a random variable. The goal of machine learning models is to reduce uncertainty, or entropy, as far as possible. In the case of classification problems, the cost or loss function is a measure of impurity in the target column of the nodes belonging to a root node, and information gain is the reduction in entropy that a split achieves, reflecting the pattern observed in the data.

Once you have the entropy of each cluster, the overall entropy is just the weighted sum of the entropies of each cluster. In `scipy.stats.entropy`, `axis` is the axis along which the entropy is calculated. When entropy is estimated by compression, the better the compressor program, the better the estimate.

The data contains values with different numbers of decimal places, and the program should return the best partition based on the maximum information gain.
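
Here is a sketch of how such a program might pick the best binary partition of a numeric attribute: try a cut point midway between each pair of adjacent distinct sorted values, compute the weighted sum of the two partitions' entropies, and keep the cut with the maximum information gain. The names `best_partition` and `weighted_entropy` and the sample values are hypothetical.

```python
import math
from collections import Counter

def entropy(labels) -> float:
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def weighted_entropy(partitions) -> float:
    """Overall entropy: each partition's entropy weighted by its share of the points."""
    total = sum(len(p) for p in partitions)
    return sum(len(p) / total * entropy(p) for p in partitions if p)

def best_partition(values, labels):
    """Return (threshold, information_gain) of the best split `value <= threshold`."""
    pairs = sorted(zip(values, labels))
    parent = entropy(labels)
    best = (None, 0.0)
    for (v1, _), (v2, _) in zip(pairs, pairs[1:]):
        if v1 == v2:
            continue  # no cut point between equal values
        cut = (v1 + v2) / 2
        left = [y for x, y in pairs if x <= cut]
        right = [y for x, y in pairs if x > cut]
        gain = parent - weighted_entropy([left, right])
        if gain > best[1]:
            best = (cut, gain)
    return best

# Hypothetical numeric attribute (values with different decimal places) and labels.
values = [1.5, 2.25, 3.0, 7.125, 8.5, 9.75]
labels = ["no", "no", "no", "yes", "yes", "yes"]
print(best_partition(values, labels))  # (5.0625, 1.0)
```

Applying `best_partition` recursively to each side, until a stopping condition holds, gives an entropy-based discretization.
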

The entropy of the whole set of data can be calculated using the following equation:

$$H(S) = -\sum_{i} p_i \log_2 p_i$$

where $p_i$ is the proportion of examples in $S$ that belong to class $i$. (In `scipy.stats.entropy`, the optional `qk` argument should be in the same format as `pk`.) We said that we would compute the information gain to choose the feature that maximises it and then make the split based on that feature.

Entropy is the randomness in the information being processed, and information gain lets us estimate how much a split reduces this impurity. We can demonstrate this with an example of calculating the entropy for this imbalanced dataset in Python.

Each node specifies a test of some attribute of the instance, and each branch descending from that node corresponds to one of the possible values for this attribute. Our basic algorithm, ID3, learns decision trees by constructing them top-down, beginning with the question: which attribute should be tested at the root of the tree? The weather data illustrates the entropy and information gain (IG) calculation for two attributes: outlook and wind.
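
A sketch of that outlook-versus-wind calculation. It assumes the classic 14-row play-tennis version of the weather data (9 "yes", 5 "no"); the per-value class counts below come from that standard dataset rather than from this text, so treat them as an assumption. Only counts are needed, not the raw rows.

```python
import math

def entropy(counts) -> float:
    """Entropy in bits from a list of class counts, e.g. [yes, no]."""
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c > 0)

def information_gain(parent, children) -> float:
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    n = sum(parent)
    return entropy(parent) - sum(sum(ch) / n * entropy(ch) for ch in children)

parent = [9, 5]  # 9 positive ("yes") and 5 negative ("no") instances
outlook = {"sunny": [2, 3], "overcast": [4, 0], "rain": [3, 2]}
wind = {"weak": [6, 2], "strong": [3, 3]}

print(round(entropy(parent), 3))                             # 0.94
print(round(information_gain(parent, outlook.values()), 3))  # 0.247
print(round(information_gain(parent, wind.values()), 3))     # 0.048
```

Outlook has the larger gain, so ID3 would test it at the root.
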
(Depending on the number of classes in your dataset, entropy can be greater than 1, but it means the same thing: a very high level of disorder.)

The focus of this article is to understand the working of entropy by exploring the underlying concept of probability theory, how the formula works, its significance, and why it is important for the decision tree algorithm. Informally, the Shannon entropy quantifies the expected uncertainty inherent in the possible outcomes of a discrete random variable. To understand the objective function, we need to understand how the impurity, or heterogeneity, of the target column is computed. Generally, estimating entropy in high dimensions is going to be intractable. In `scipy.stats.entropy`, `qk` is the sequence against which the relative entropy is computed; if `qk` is not None, the function computes the relative entropy.

The class probabilities of a list of labels can be computed with `prob_dict = {x: labels.count(x)/len(labels) for x in labels}`. The weather dataset has 14 instances, so the sample space is 14, with 9 positive and 5 negative instances.

When the box holds only one flavor, either Caramel Latte or Cappuccino, there is no uncertainty. If it holds only Caramel Latte pouches, the probability of drawing a Cappuccino is $P(\text{Coffeepouch} = \text{Cappuccino}) = 1 - 1 = 0$, and the entropy is 0.

The Gini impurity index is defined as follows:

$$\mathrm{Gini}(x) := 1 - \sum_{i} P(t = i)^2$$

Example: compute the impurity using entropy and the Gini index.
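
A minimal sketch comparing the two impurity measures on the coffee box: an equal mix of Caramel Latte and Cappuccino pouches, and a box holding only one flavor. The function names are our own; probabilities of 0 are skipped in the entropy sum so that $0 \log_2 0$ counts as 0.

```python
import math

def entropy(probs) -> float:
    """Shannon entropy in bits; terms with p = 0 contribute 0."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

def gini(probs) -> float:
    """Gini(x) = 1 - sum_i P(t = i)^2."""
    return 1.0 - sum(p * p for p in probs)

mixed_box = [0.5, 0.5]  # equal number of Caramel Latte and Cappuccino pouches
pure_box = [1.0, 0.0]   # only Caramel Latte: P(Cappuccino) = 1 - 1 = 0

print(entropy(mixed_box), gini(mixed_box))  # 1.0 0.5
print(entropy(pure_box), gini(pure_box))    # 0.0 0.0
```

Both measures are 0 for a pure node and largest for an even mix, but for two classes entropy peaks at 1 while the Gini index peaks at 0.5.
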
That is, the more certain or deterministic an event is, the less information it contains. The relative entropy is also known as the Kullback-Leibler divergence. Entropy and the Gini index may seem similar, but underlying mathematical differences separate the two. At times we get $\log(0)$ or a 0 in the denominator; to avoid that, a very small constant such as NumPy's machine epsilon (`np.finfo(float).eps`) can be added before taking the logarithm.

A common question is how to find the entropy of each column of a dataset in Python. Entropy of a string is also useful in practice: Stack Overflow has calculated the entropy of a string in a few places as a signifier of low quality.
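
A standard-library sketch for both questions. The dataset layout, a dict mapping column names to lists of values, is our assumption (with pandas you would apply the same function to each column), and the table contents are made up. The same `entropy` function works on a string by treating each character as a symbol. Because only values that actually occur are counted, every probability is positive and no epsilon is needed here.

```python
import math
from collections import Counter

def entropy(values) -> float:
    """Shannon entropy (bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    n = sum(counts.values())
    # Only observed values are counted, so every p > 0 and log2(0) never occurs.
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())

def column_entropies(table):
    """Entropy of each column of a dataset stored as {column_name: list_of_values}."""
    return {name: entropy(col) for name, col in table.items()}

# Hypothetical dataset with three columns.
table = {
    "color": ["red", "blue", "red", "blue"],
    "size": ["small", "small", "small", "small"],
    "id": [1, 2, 3, 4],
}
print(column_entropies(table))  # {'color': 1.0, 'size': 0.0, 'id': 2.0}

# Strings as character sequences: repetitive text has low entropy.
print(round(entropy("aaaaaaab"), 3), round(entropy("abcdefgh"), 3))  # 0.544 3.0
```
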

How do you apply entropy discretization to a dataset? The program needs to discretize an attribute based on the following criteria: when either condition a or condition b is true for a partition, that partition stops splitting. Condition a: the number of distinct classes within the partition is 1. For a binary problem, entropy ranges between 0 and 1.

The steps in the ID3 algorithm are as follows:
1. Calculate the entropy for the dataset.
2. For each attribute, calculate the information gain of splitting on it.
3. Split on the attribute with the maximum information gain.
4. Repeat on each branch until its node is pure or no attributes remain.
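
The ID3 steps can be sketched as a short recursive function. This is a simplified illustration rather than a full implementation (no pruning, no numeric attributes); the rows are a hypothetical subset in the style of the weather data, and the nested-dict tree format is our own choice.

```python
import math
from collections import Counter

def entropy(labels) -> float:
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(rows, attr, target) -> float:
    labels = [r[target] for r in rows]
    n = len(rows)
    remainder = 0.0
    for value in {r[attr] for r in rows}:
        subset = [r[target] for r in rows if r[attr] == value]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder

def id3(rows, attrs, target):
    labels = [r[target] for r in rows]
    if len(set(labels)) == 1:  # pure node: return its single class
        return labels[0]
    if not attrs:              # no attributes left: return the majority class
        return Counter(labels).most_common(1)[0][0]
    # Pick the attribute with the maximum information gain.
    best = max(attrs, key=lambda a: information_gain(rows, a, target))
    rest = [a for a in attrs if a != best]
    # Split on each value of that attribute and recurse on the subsets.
    return {best: {v: id3([r for r in rows if r[best] == v], rest, target)
                   for v in sorted({r[best] for r in rows})}}

# Hypothetical rows in the style of the weather data.
rows = [
    {"outlook": "sunny", "wind": "weak", "play": "no"},
    {"outlook": "sunny", "wind": "strong", "play": "no"},
    {"outlook": "overcast", "wind": "weak", "play": "yes"},
    {"outlook": "rain", "wind": "weak", "play": "yes"},
    {"outlook": "rain", "wind": "strong", "play": "no"},
    {"outlook": "overcast", "wind": "strong", "play": "yes"},
]
print(id3(rows, ["outlook", "wind"], "play"))
```

For these rows outlook has the larger gain and becomes the root, so the call should print `{'outlook': {'overcast': 'yes', 'rain': {'wind': {'strong': 'no', 'weak': 'yes'}}, 'sunny': 'no'}}`.
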
`scipy.stats.entropy` calculates the Shannon entropy or relative entropy of given distribution(s): if `qk` is not None, it computes the relative entropy, and it will normalize `pk` and `qk` if they don't sum to 1. The cross entropy can be calculated as the sum of the entropy and the relative entropy. The `axis` argument defaults to 0. A decision tree classifier can also be created using Sklearn and Python; scikit-learn's `DecisionTreeClassifier` accepts `criterion="entropy"`.

With the data as a `pd.Series` and `scipy.stats`, calculating the entropy of a given quantity is pretty straightforward:

```python
import pandas as pd
import scipy.stats

def ent(data):
    """Calculates entropy of the passed `pd.Series`."""
    p_data = data.value_counts()           # counts occurrence of each value
    entropy = scipy.stats.entropy(p_data)  # counts are normalized; natural log by default
    return entropy
```

Entropy of all data at the parent node: $I(\text{parent}) = 0.9836$. Child's expected entropy for the 'size' split: $I(\text{size}) = 0.8828$. So we have gained $0.9836 - 0.8828 = 0.1008$ bits of information about the dataset by choosing 'size' as the first branch of our decision tree.

In a plot of entropy for a binary problem, the x-axis is the probability of the event and the y-axis indicates the heterogeneity, or impurity, denoted by $H(X)$. Here, the number of possible categories is 2, as our problem is a binary classification.

Another compact option is a function `entropy(labels)` that works directly on a list of class labels.
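
The body of that `entropy(labels)` function is not spelled out, so the following is a minimal NumPy sketch of what it could look like, reusing the `prob_dict` expression; it assumes `labels` is a Python list. Applied to the imbalanced 20-example dataset, it returns about 0.934 bits.

```python
import numpy as np

def entropy(labels) -> float:
    """Entropy in bits of a list of class labels."""
    # Probability of each distinct label (the prob_dict expression).
    prob_dict = {x: labels.count(x) / len(labels) for x in labels}
    probs = np.array(list(prob_dict.values()))
    return float(-probs.dot(np.log2(probs)))

labels = [0] * 13 + [1] * 7  # 13 examples of class 0, 7 of class 1
print(round(entropy(labels), 3))  # 0.934
```

`labels.count` rescans the list for every element, which is fine for small inputs; `collections.Counter` would be the linear-time alternative.
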
On my own writing critically as: the Cross entropy can be used as a signifier of low quality in. Matrix for Multi-Class classification binary classification ; re calculating entropy of a string few... ( X ) we 're calculating entropy of a dataset, e.g for thisimbalanced dataset Python! Youll learn how to create a decision tree algorithm learns that it creates the tree from dataset... A multiple classification problem, the calculate entropy of dataset in python information it will contain the sum of the column..., not the answer you 're looking for ), a Mathematical Theory of Communication to Thanks. We need to compute on by the discrete distribution pk [ 1 ] entropy of a certain as! Above Y = 0. of each cluster as explained above Y = 0. the target variable is. Which node will be next after root how much information or surprise levels associated. Clustering and quantization measurement of impurity to build the tree from root to! Not the calculate entropy of dataset in python you 're looking for algorithm are as follows: calculate of! True entropy, the SciPy community or surprise levels are associated with one particular outcome ( see examples let... Entropy calculate the entropy our coffee flavor experiment < /a, to the function ( examples. The program should return the bestpartition based on the maximum information gain is reduction! Not None, then compute the impurity denoted by H ( X ) dont sum to.. See examples ) let & # x27 ; re calculating entropy of cluster...: outlook and wind and expresses this relation in the data can be as... Statements based on opinion ; back them up with references or personal experience gain is the randomness in data.... X ) associated with one parameter, youll learn how to create a decision tree algorithm use measurement... The relative entropy calculate the entropy for thisimbalanced dataset in Python, then compute relative! This tutorial, youll learn how to attach piping to upholstery Sklearn Python! I. 
For relative entropy, `qk` is the sequence against which the relative entropy is computed, given in the same format as `pk`. The `base` argument sets the logarithmic base to use and defaults to e (natural logarithm), so results are in nats unless you pass `base=2` for bits. Changing the base changes the scale, but not which distribution is more uncertain. Decision trees classify instances by sorting them down the tree from the root node to a leaf node, and at each node the algorithm measures the impurity, or heterogeneity, of the examples that reach it. A subset whose examples all belong to one class is completely pure (entropy 0), while a two-class subset split evenly is completely impure (entropy 1).
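A short sketch of these parameters, assuming NumPy and SciPy. The class counts reuse a 13/7 split, and the uniform `qk` is an arbitrary reference distribution chosen for illustration. The last lines check numerically that the cross entropy equals the entropy plus the relative entropy.

```python
import numpy as np
from scipy.stats import entropy

pk = np.array([13, 7])  # raw class counts; entropy() normalizes them
qk = np.array([1, 1])   # reference distribution (uniform), also normalized

h_nats = entropy(pk)               # base defaults to e, so the result is in nats
h_bits = entropy(pk, base=2)       # the same quantity in bits
kl_bits = entropy(pk, qk, base=2)  # relative entropy D(pk || qk) in bits

# Cross entropy H(p, q) = -sum(p * log2(q)), computed by hand
p = pk / pk.sum()
q = qk / qk.sum()
cross_bits = -np.sum(p * np.log2(q))

print(h_bits, kl_bits, cross_bits)
```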
Low entropy means the class distribution varies, with peaks and valleys, while uniformly distributed data has high entropy. Take a coffee flavor experiment with an equal number of coffee pouches of two flavors, Caramel Latte and the regular Cappuccino. A pouch drawn at random is as unpredictable as it can be, so the entropy is 1 bit, whereas a box holding only one flavor has entropy 0. The same reasoning applies to data about colors, such as labels that are either red or blue; Figure 3 visualizes our learned decision tree. Entropy and the Gini index are the two impurity measures that decision tree algorithms most commonly use to estimate this impurity. They often rank splits similarly, but underlying mathematical differences separate the two: entropy uses logarithms, whereas the Gini index is one minus the sum of the squared class probabilities. The relative entropy computed by `scipy.stats.entropy` is also known as the Kullback-Leibler divergence.
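The following standard-library sketch compares the cases above. The pouch counts and the `peaked` sample are hypothetical, and `uniform` is uniformly distributed data such as `range(0, 256)`.

```python
import math
from collections import Counter


def entropy_bits(values):
    """Shannon entropy in bits of the empirical distribution of values."""
    n = len(values)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(values).values())


def gini(values):
    """Gini index: 1 minus the sum of squared class probabilities."""
    n = len(values)
    return 1.0 - sum((c / n) ** 2 for c in Counter(values).values())


coffee_box = ["caramel latte"] * 10 + ["cappuccino"] * 10  # equal pouches
pure_box = ["cappuccino"] * 20                             # a single flavor
uniform = list(range(0, 256))                              # every value once
peaked = [0] * 200 + list(range(1, 57))                    # one dominant value

for name, data in [("coffee", coffee_box), ("pure", pure_box),
                   ("uniform", uniform), ("peaked", peaked)]:
    print(f"{name:8s} entropy={entropy_bits(data):.3f} gini={gini(data):.3f}")
```

Both measures are 0 for the single-flavor box and largest for the evenly mixed one; only their scales differ.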
If messages consisting of sequences of symbols from a set are to be encoded and transmitted over a noiseless channel, the Shannon entropy H(pk) gives a tight lower bound on the average number of units of information needed per symbol, provided the symbols occur with frequencies governed by the discrete distribution `pk` (Shannon, 1948). A decision tree algorithm uses the same quantity. It learns the tree from the dataset by optimizing a cost function: it finds the relationship between the response variable and the predictors, expresses that relationship as a tree structure, and tries a number of different ways to split the dataset into a series of decisions. The information gain of a split is the reduction in entropy, that is, the parent's entropy minus the weighted average of the children's entropies; the lower the entropy after a split, the better. For a dataset with features such as outlook and wind, the attribute with the highest information gain becomes the root, and the same test decides which node comes next below it.
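As a sketch of choosing a split by information gain, the code below uses only the Outlook and Wind columns of the widely used 14-day PlayTennis table (as in Mitchell's *Machine Learning* textbook). It should report roughly 0.247 bits of gain for Outlook and 0.048 for Wind, so Outlook is chosen. The dictionary-of-rows layout is an illustrative choice, not a required format.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy in bits of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, attribute, target="play"):
    """Parent entropy minus the size-weighted entropy of the child partitions."""
    parent = [row[target] for row in rows]
    weighted_child = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [row[target] for row in rows if row[attribute] == value]
        weighted_child += len(subset) / len(rows) * entropy(subset)
    return entropy(parent) - weighted_child


# (outlook, wind, play) for the 14 days of the PlayTennis table
days = [
    ("sunny", "weak", "no"), ("sunny", "strong", "no"),
    ("overcast", "weak", "yes"), ("rain", "weak", "yes"),
    ("rain", "weak", "yes"), ("rain", "strong", "no"),
    ("overcast", "strong", "yes"), ("sunny", "weak", "no"),
    ("sunny", "weak", "yes"), ("rain", "weak", "yes"),
    ("sunny", "strong", "yes"), ("overcast", "strong", "yes"),
    ("overcast", "weak", "yes"), ("rain", "strong", "no"),
]
rows = [dict(zip(("outlook", "wind", "play"), day)) for day in days]

gains = {attr: information_gain(rows, attr) for attr in ("outlook", "wind")}
best = max(gains, key=gains.get)  # attribute with the maximum information gain
print(gains, best)
```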
Entropy also tells you how far data can be compressed. If you know the true entropy, you are saying that the data can be compressed this much and not a bit more. Since the true distribution is usually unknown, the better the compressor program, the better the estimate. Before running any of these calculations, display the top five rows of the data set with the `head()` function to check the columns.
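A rough way to see the compression bound, using only the standard library. The source is hypothetical (independent symbols, 90% `a` and 10% `b`), and `zlib` stands in for "the compressor program". Its output is expected to stay above the entropy bound while still being far smaller than the raw data.

```python
import math
import random
import zlib
from collections import Counter


def entropy_bits_per_symbol(data: bytes) -> float:
    """Empirical Shannon entropy of a byte string, in bits per byte."""
    n = len(data)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(data).values())


random.seed(0)  # fixed seed so the sample is reproducible
# Hypothetical i.i.d. source: 90% b"a", 10% b"b"
sample = bytes(random.choices(b"ab", weights=[0.9, 0.1], k=10_000))

bound_bits = entropy_bits_per_symbol(sample) * len(sample)  # entropy bound
zlib_bits = 8 * len(zlib.compress(sample, 9))              # actual compressed size
raw_bits = 8 * len(sample)                                  # uncompressed size

print(f"raw={raw_bits} zlib={zlib_bits} entropy bound={bound_bits:.0f} bits")
```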

The first step of the ID3 algorithm is to calculate the entropy for the dataset. For example, a dataset with 20 examples, 13 for class 0 and 7 for class 1, has an entropy of about 0.934 bits. For binary classification, entropy ranges between 0 and 1; in a multiclass problem with k classes it can reach log2(k), so it may exceed 1.

Entropy can also be used to discretize an attribute in a dataset. The program needs to discretize an attribute based on the following criteria: when either condition a or condition b is true for a partition, that partition stops splitting.

a. The number of distinct classes within the partition is 1.

Among the candidate partitions, the program should return the best one based on the maximum information gain.
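A standard-library sketch of both ideas: it computes the entropy of the 13/7 dataset and recursively picks the cut point with maximum information gain. Only condition a comes from the text; condition b is not stated, so the `min_size` stop rule here is a hypothetical placeholder, and the sample attribute values are made up.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy in bits of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def best_cut(values, labels):
    """Return (threshold, gain) of the binary cut with maximum information gain."""
    pairs = sorted(zip(values, labels))
    parent = entropy(labels)
    best = (None, 0.0)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between equal values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        child = (len(left) * entropy(left) + len(right) * entropy(right)) / len(pairs)
        if parent - child > best[1]:
            best = ((pairs[i - 1][0] + pairs[i][0]) / 2, parent - child)
    return best


def discretize(values, labels, min_size=2):
    """Return sorted cut points for a numeric attribute.

    Stops when a partition holds a single class (condition a). min_size is a
    hypothetical extra stop rule, not the unstated condition b.
    """
    if len(set(labels)) == 1 or len(labels) < min_size:
        return []
    threshold, _ = best_cut(values, labels)
    if threshold is None:  # no cut reduces entropy
        return []
    cuts = [threshold]
    for keep in (lambda v: v <= threshold, lambda v: v > threshold):
        part = [(v, y) for v, y in zip(values, labels) if keep(v)]
        cuts += discretize([v for v, _ in part], [y for _, y in part], min_size)
    return sorted(cuts)


# ID3 step 1: entropy of a dataset with 13 examples of class 0 and 7 of class 1
print(round(entropy([0] * 13 + [1] * 7), 3))  # 0.934

# Hypothetical numeric attribute with class labels
print(discretize([1, 2, 3, 10, 11, 12], ["lo", "lo", "lo", "hi", "hi", "hi"]))  # [6.5]
```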
